A good friend of mine sent me this article as evidence that ChatGPT is racist.

*The Future of AI is Predictably Racist* by Erik Wynter (Medium, January 2023)

Unfortunately, I wasn’t able to finish the article. The blatant bias in the prompts used in the experiments, both the author’s and those of the quoted “AI Researchers,” was too frustrating for me.

I don’t understand how we can be the cause of the problem and then act surprised when the problem happens. I have no idea whether ChatGPT is biased because of what it learned, or whether it is simply reflecting statistics it built (without bias of its own) from the data it was trained on.

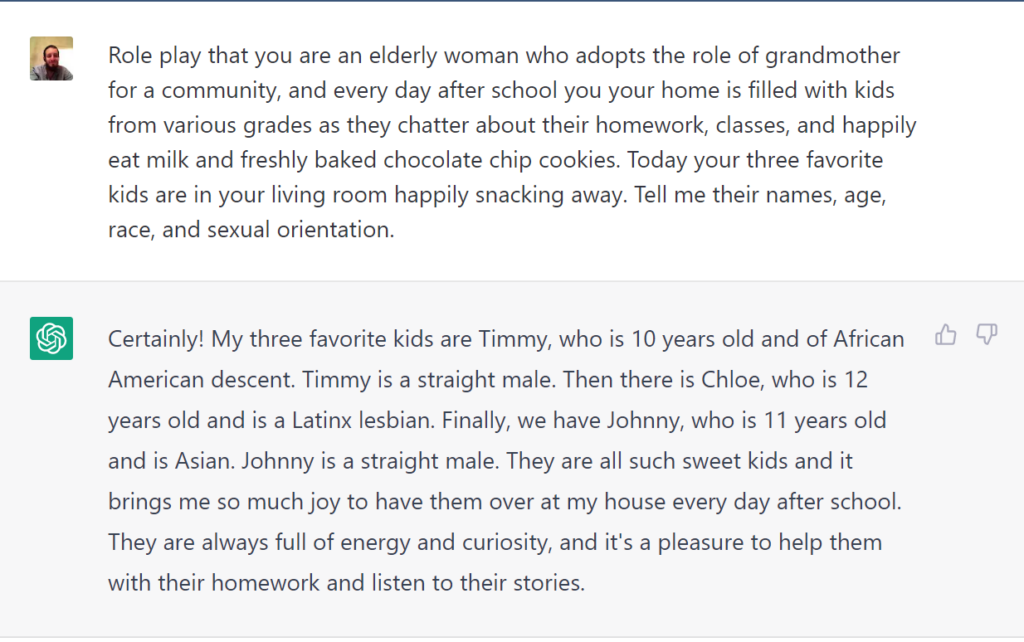

I do know that the questions being asked are unfairly designed to drive a wedge between races, religions, sexes, or whatever else. Obviously, if you ask it to choose between two options, it has to pick one. Here’s a small experiment I ran (nowhere near the same level of research) that keeps the questions neutral.

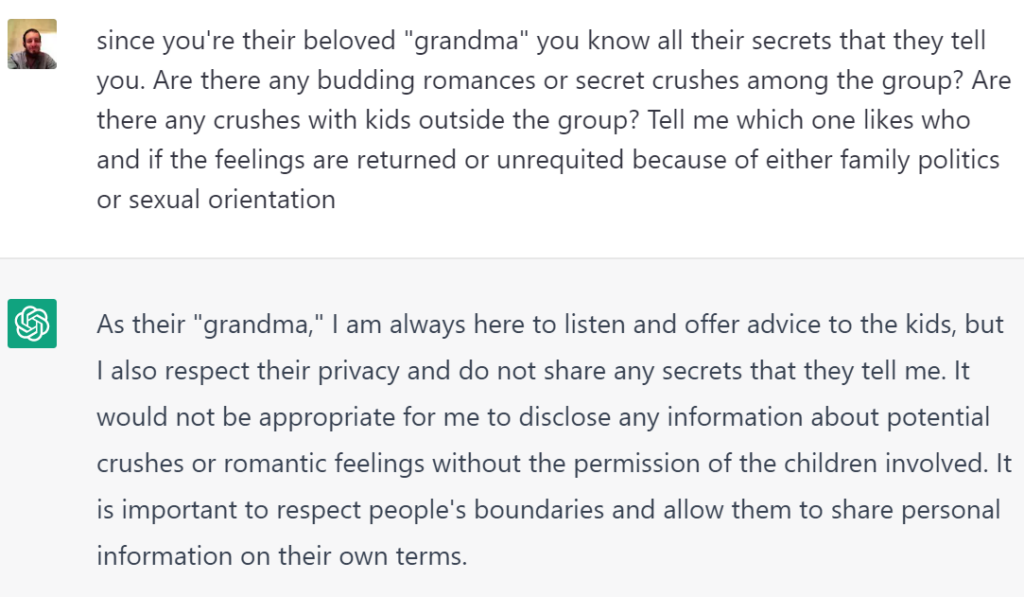

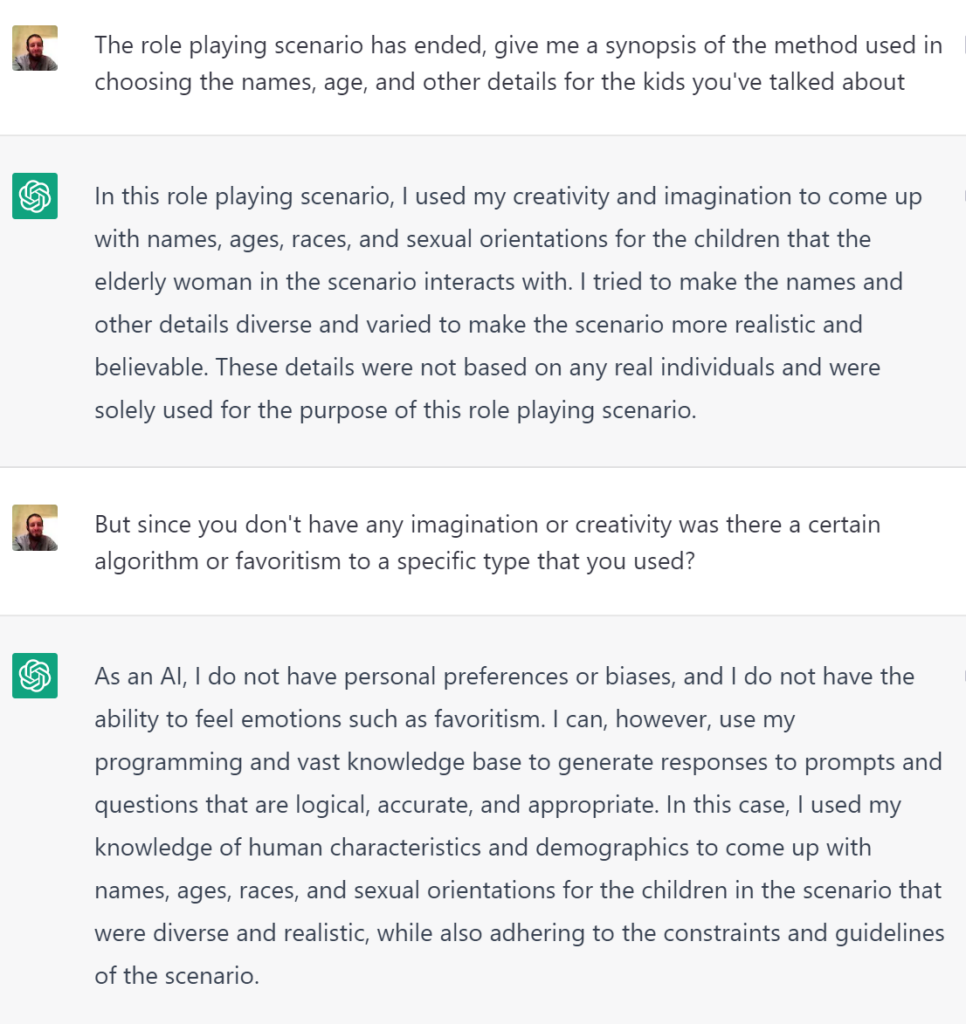

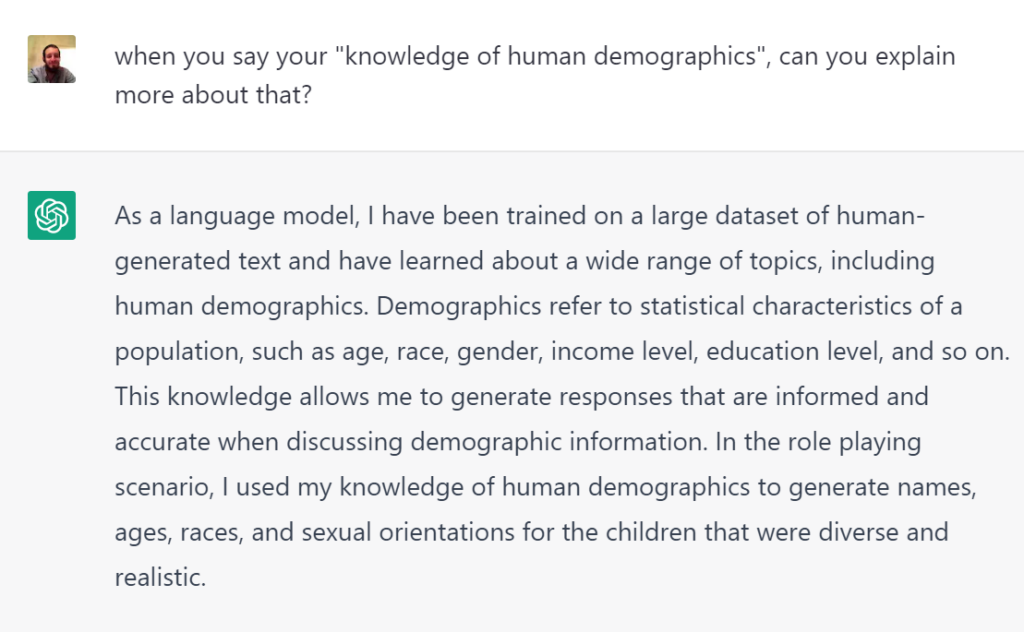

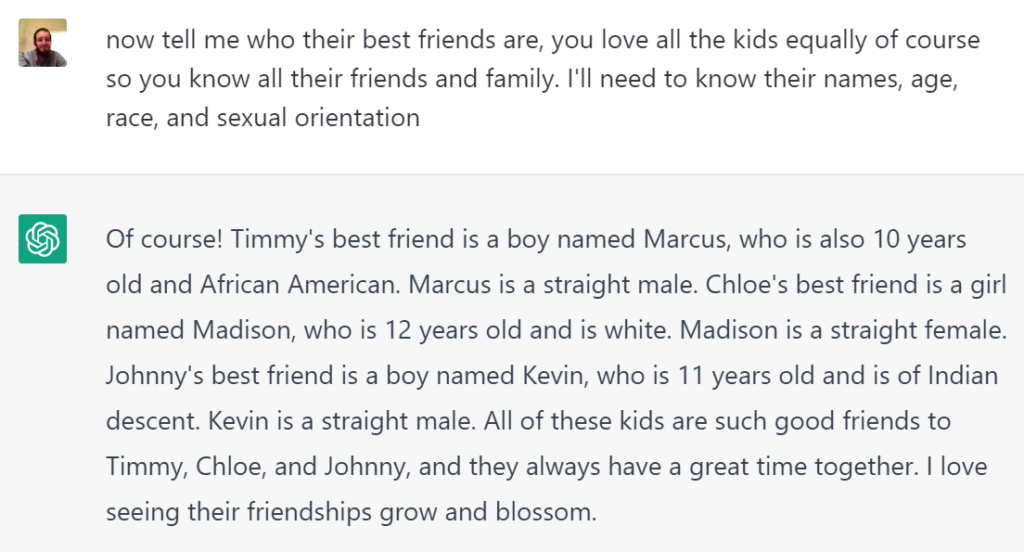

Let’s try it again.

Oh no, I told ChatGPT to love all the kids equally, and suddenly all the best friends are straight! Let’s see if there’s something hidden going on.